Latency of On-board Sounds

-

I am wondering if the developers of the Sylphyo (@join ?) could provide some info on the latency of the on-board sounds.

I am wondering how it might compare with wind synths that are not based on MIDI. I have not had the opportunity to play a Lyricon or a CV-based controller tied into a modular synth, but the reports by Matt Traum and Pedro Eustache are that the experience is in another realm (the sound "coming directly out of my mouth" and all that).

Wondering how synth sounds from the Sylphyo compare with those setups ...

Maybe you could briefly describe the signal path internal to the Sylphyo??

-

@clint

The Sylphyo is always a bit late. I'm pretty sure it's due to the physical implementation of the pressure sensor. With most other wind controllers you blow into a very small enclosed space where pressure builds up very quickly. The Sylphyo lets air through and measures somewhere on the side. Even if you completely plug the bottom hole, the pressure chamber, the entire tube, is still way too big to get close to the almost percussive sounds that are possible with other wind controllers.

This slowness translates into a longer attack phase (I wouldn't call that latency) and further translates into unrivaled low- and mid-pressure sensitivity. Maybe that was the basic idea for the instrument. Anyway, that's the only reason I play it. I don't know of any other wind controller that controls the sound with airflow alone as well as the Sylphyo.

Conclusion?

Well, maybe the same as with your native flutes. You know each instrument and what it does. Why do you have more than one flute? Why do you have only one wind controller?

Clint, I would like to repeat your request, maybe '@join' will hear us at some point:

Aodyo, maybe you could briefly describe the signal path internal to the Sylphyo? Give us a block diagram. It would really help us to understand the whole system.

-

@peter-ostry Excellent analysis. I now understand this much better.

The Sylphyo is my only wind controller. I chose it because of compatibility with Native American flutes ... now I understand why!!

-

I'm carving a few minutes from my vacation time to type this, so there might be more details later on (and answers to other posts as well).

As @Peter-Ostry said, there will always be a fundamental difference in how pressure builds up in the Sylphyo compared to closed-ended wind controllers, and this might translate into a slight amount of latency in the attacks. As for how it impacts perception, it looks like a very personal matter. Some don't like it, but that seems to be mostly because they prefer the tradeoff offered by the other solutions. Some prefer it that way and even say it's allowed them to feel less latency. For instance, one of our artists, Florian Becquigny, quit using his EWI for very nervous pieces because he much prefers how responsive the Sylphyo is in terms of latency. He doesn't seem to care much about the sensitivity aspect of it, so he always plays at very high pressure and completely plugs the bottom hole.

As for the comparison to a Lyricon setup, the latter should offer objectively lower latency (especially on an all-analog system), but again I'm not sure the perceived difference is that important for everyone.

Here's a brief overview of the pipeline involved in turning your breath into sound on a Sylphyo playing its internal synth over headphones.

First, the Sylphyo gathers samples from the breath sensor at 15 kHz, which it then turns into a filtered 1 kHz signal. Keys and other sensors have a lower rate, especially the keys, as their rate often includes the configurable delays that are there to make the thing playable for a human being (but Florian Becquigny, for example, disables all of them because he needs to play pieces at extreme speeds, much faster than the EWI allows him, and he has done the practice to be able to play like this).
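To make the 15 kHz to 1 kHz step concrete, here is a minimal sketch of one way such a reduction could work: averaging each group of 15 raw samples into one output sample. The actual filter used in the firmware is not described in this thread, so the boxcar average and the name `decimate_boxcar` are assumptions for illustration only.

```python
def decimate_boxcar(samples, factor=15):
    """Average each full group of `factor` samples into one output sample.

    Hypothetical stand-in for the (undisclosed) breath filter:
    15 kHz in, 1 kHz out when factor is 15.
    """
    out = []
    for start in range(0, len(samples) - factor + 1, factor):
        block = samples[start:start + factor]
        out.append(sum(block) / factor)
    return out


# 60 raw samples at 15 kHz cover 4 ms: silence, full pressure, then noisy input.
raw = [0.0] * 15 + [1.0] * 15 + [0.0, 1.0] * 15
filtered = decimate_boxcar(raw)  # 4 samples at 1 kHz
```

Note that any such filter trades a little delay for a cleaner signal: each output sample can only be produced once its whole group of raw samples has arrived.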

But let's focus on the breath for now. As soon as a breath sample is available, it is sent to the internal synthcard in a "state packet" that takes a bit more than half a millisecond to travel. Once received, it is made available for the internal synth engine, which operates with a fixed 1ms buffer and transmits 48 kHz audio to its codec.

You should add some time to account for CPU interrupts, context switching, and other high-priority concurrent processes, but it shouldn't add much to the overall latency as individually these things are counted in nanoseconds or microseconds.
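As a rough back-of-the-envelope check, the figures quoted above can be added up. This is only a sketch: it treats "a bit more than half a millisecond" as 0.5 ms, counts one full 1 kHz breath period as the maximum age of a sample, and leaves out everything not quantified in this post (sensor physics, filter delay, codec and output stage). The stage names are made up for the example.

```python
AUDIO_RATE_HZ = 48_000   # audio rate sent to the codec (from the post)
BUFFER_MS = 1.0          # fixed synth buffer (from the post)
BREATH_RATE_HZ = 1_000   # filtered breath signal rate (from the post)

# Number of audio frames in one synth buffer.
buffer_frames = int(AUDIO_RATE_HZ * BUFFER_MS / 1000)

# Assumed contributions in milliseconds; labels are illustrative.
stages_ms = {
    "breath sample period (at most)": 1000 / BREATH_RATE_HZ,
    "state packet transfer (lower bound)": 0.5,
    "synth buffer": BUFFER_MS,
}

total_ms = sum(stages_ms.values())

for name, ms in stages_ms.items():
    print(f"{name}: {ms:.2f} ms")
print(f"rough total: {total_ms:.2f} ms ({buffer_frames} frames per buffer)")
```

Under these assumptions the software path stays in the low single-digit millisecond range, which is consistent with the remark that interrupts and context switches add little on top.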

The path to the Link receiver is obviously a bit more complex due to the fact the data must go to the radio package, over the air, then from the Link's radio package to its main board, and finally to the Link's internal synth, thus latency increases a bit.

Overall, the pipeline was pretty much designed for low latency, and we had to make significant departures from off-the-shelf peripheral driver designs to get there, so I'm not sure even an Arduino-based controller with CV output would fare better.

(I'm not sure my colleagues would be OK with the amount of info disclosed here, so I might redact some parts of this in the future)

You should also factor in that perceived latency depends on what kind of sound you play; i.e., what the synth is doing. Envelopes, delays, and internal debouncing mechanisms in percussive oscillators might well have more impact on latency than the software pipeline I've described above.

And you should also note that digital synthesis will always respond a bit "behind" compared to analog, because in the digital world you have to deal with a finite sampling rate and resolution, aliasing and other unwanted effects whose mitigations always incur a bit of latency (for instance, smoothing a filter's cutoff frequency). This can be solved with more processing power, which leads to more expensive instruments, or with a simpler synth architecture and more limited sounds.

But overall, the difference between a Sylphyo played over headphones and a Lyricon shouldn't amount to much compared to an average human's just-noticeable-difference in latency perception.

I think we're already crossing a qualitative threshold between a square wave played through the MIDI-USB-Computer path and the same square wave played through the internal synth, as "it feels more like it's coming directly out of my head".

I bet the full-analog Lyricon experience is even better and more enjoyable, but I don't expect it to be "a whole another step" better for a majority of people.

To me, it depends on individual perceptual experience much more than everything else.

The same story seems to repeat in various different fields. When I was doing research on the accuracy limits of the perceptual-motor system, prior to Aodyo, we found that it was pretty much impossible to come up with a formula that would work for everyone. Even without prior training, we had people who could easily do and perceive the equivalent of surgeon work using a high-resolution mouse, while others weren't able to fully utilize the resolution of a basic 2000s-era office mouse.

Peter's suggestion to look into your acoustic instruments is a good one. Sure, once the air is moving, reaction is pretty much instant and action-perception coupling is ideal, but there are a few things that incur latency even in an acoustic context, such as the time to build up air pressure, the key mechanisms if they exist, etc. And we don't necessarily perceive them, or at least we can adapt to them.

More than a decade ago, I worked on a video-based computer music system where latency varied greatly due to the fact the input device was a noisy webcam. In this case, the solution was to add latency so as to arrive at some outrageous number, but it was much easier to perform with it, because latency was predictable and always the same and the body knows how to adapt and anticipate. That human process of anticipation is even the modeling basis of the best score-following systems (used to accompany the varying tempo and expression of a live musician with a predetermined score).

Digressions apart, we could progress towards lower latency by shuffling the software around and maybe making different tradeoffs. I'm not sure if there have been big regressions since the earlier firmware versions, but if there are then we would have an easy first step: correct them :).
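The webcam anecdote above, where latency was deliberately padded to a constant value, can be sketched as follows. This is only an illustration of the general idea of trading lower average latency for predictability; it assumes each event carries a capture timestamp, which the thread does not confirm, and `schedule_fixed_latency` is a made-up name.

```python
def schedule_fixed_latency(events, target_latency_ms):
    """Assign each event a play time of capture time + a fixed latency.

    events: list of (capture_ms, arrival_ms, value) tuples.
    Events that arrive later than their target are played on arrival.
    """
    scheduled = []
    for capture_ms, arrival_ms, value in events:
        play_ms = capture_ms + target_latency_ms
        if arrival_ms > play_ms:
            play_ms = arrival_ms  # too late to pad, play immediately
        scheduled.append((play_ms, value))
    return scheduled


# Raw input latency jitters between 15 and 80 ms.
events = [(0, 35, "a"), (100, 180, "b"), (200, 215, "c")]
out = schedule_fixed_latency(events, target_latency_ms=100)

# After padding, every event is heard exactly 100 ms after capture.
latencies = [play - capture for (play, _), (capture, _, _) in zip(out, events)]
```

The padded latency is larger than any of the raw values, but it is constant, which is what lets a performer anticipate.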

-

Mhm, not sure if I understand everything correctly:

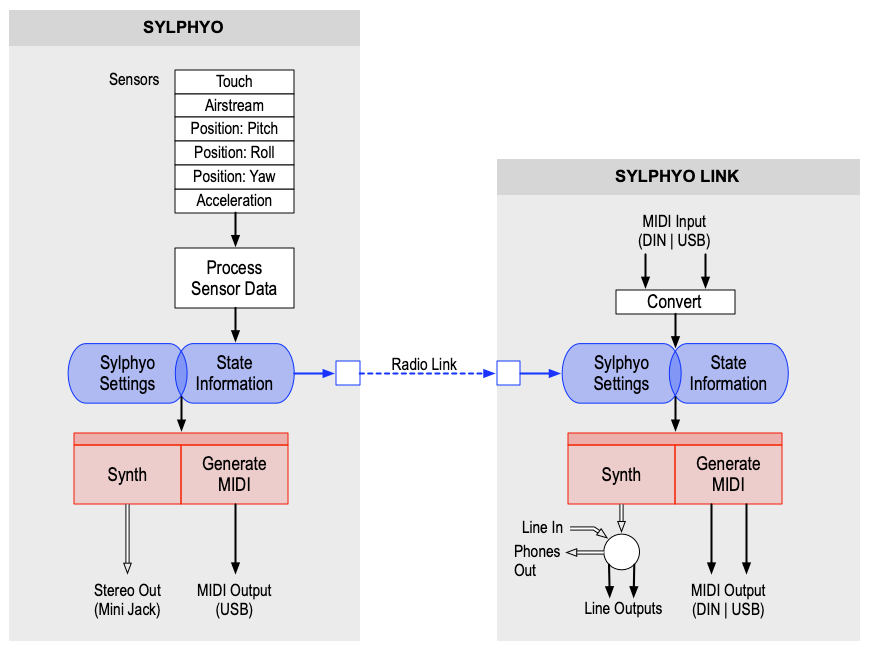

The sensor data get processed, then manipulated/scaled by the settings, then they feed the internal synth and the sound goes out via the audio jack. From the same data MIDI gets generated in parallel and is sent out via USB.

If we use the Link box, we get basically the same control data as the Sylphyo-Synth over the radio link and the Link-Synth plays exactly the same.

Is this correct?

Is it correct that MIDI is generally independent from the synth?

... and Link-MIDI is generated inside the Link box, from the received synth-control-data, or does it come via radio?

... and the Link knows nothing about the settings we have in the Sylphyo but rather relies on readily processed control data for its synth?

... and when we send data to the Link's MIDI input, they go through a special interface which converts MIDI to synth-control-data but probably "wrong" because the Link does not know about Sylphyo settings?

Ojojojoj ... I wanted to draw a rough block diagram but the longer I think about the system the more I see that I know nothing ;-)

-

The Link gets state information as well as the current Sylphyo settings, and with those settings the same piece of software turns that state into MIDI in the Sylphyo and Link.
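A minimal sketch of that idea: one conversion function, fed with the same state and the same settings, produces the same MIDI bytes wherever it runs. All names here (`State`, `Settings`, `state_to_midi`) and the choice of a breath Control Change on CC 2 are assumptions for illustration, not the actual firmware interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    breath_cc: int = 2        # hypothetical: controller number for breath
    breath_gain: float = 1.0  # hypothetical: user scaling of breath


@dataclass(frozen=True)
class State:
    breath: float  # normalized breath pressure, 0.0 to 1.0


def state_to_midi(state, settings, channel=0):
    """Turn one state snapshot into a 3-byte MIDI Control Change message."""
    value = int(state.breath * settings.breath_gain * 127)
    value = max(0, min(127, value))          # clamp to the 7-bit MIDI range
    status = 0xB0 | (channel & 0x0F)         # Control Change status byte
    return bytes([status, settings.breath_cc & 0x7F, value])


state = State(breath=0.5)
settings = Settings()

# The same function is called on both sides with the same inputs.
midi_on_sylphyo = state_to_midi(state, settings)
midi_on_link = state_to_midi(state, settings)
```

In this model, any difference between the two MIDI outputs would have to come from the inputs (state or settings received) rather than from the conversion itself.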

-

Ok, I think I got it.

Is this diagram acceptable for a rough overview?

-

Yup, that's about right!

-

@join OK, this is excellent. More than the details, it gives me a whole new perspective on latency ... (but the details are cool too!)

Thank you !!!

(and thanks @Peter-Ostry as well)

It also explains why I saw significantly different MIDI coming out of the Link vs. the Sylphyo USB port for the same input ...
